TIL Multiclass Classification using the One-vs-all method

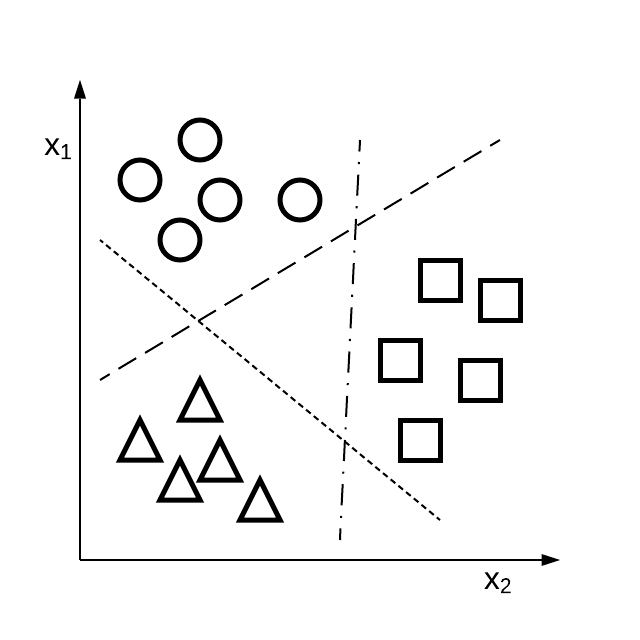

Multiclass classification can be achieved by breaking the problem up into multiple binary classification problems. This is called the one-vs-all or one-vs-rest method. For each individual discrete case, you predict the probability that it is the correct (“positive”) case for your given inputs, treating the rest of the cases as one combined “negative” case. Then you pick the case that has the highest probability.
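Written out in symbols, the idea looks roughly like this. The notation is my own assumption, not something fixed by the method: $K$ is the number of cases, $x$ is the input, and $h^{(k)}$ is the binary classifier trained with case $k$ as the positive case.

$$
h^{(k)}(x) \approx P(y = k \mid x), \qquad k = 1, \dots, K
$$

$$
\hat{y} = \arg\max_{k \in \{1, \dots, K\}} h^{(k)}(x)
$$

Each $h^{(k)}$ is trained separately, so the $K$ estimated probabilities are not guaranteed to sum to 1. Only their ranking matters for the final pick.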

The number of binary classification sub-problems you’d break your multiclass classification problem into equals the number of cases you’re trying to predict. If you have 3 target cases, you’d solve 3 different binary classification problems, each one treating a different target case as the positive case.
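Here is a minimal sketch of one-vs-all in plain Python, so I can see the moving parts. It assumes logistic regression trained by batch gradient descent as the binary classifier, which is my choice for illustration and not required by the method. The function names (`train_binary`, `train_one_vs_all`, `predict`), the learning rate, the epoch count, and the toy data are all made up for this example.

```python
import math


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def score(w, x):
    # w[0] is the bias term; w[1:] are the feature weights.
    return sigmoid(w[0] + sum(wj * xj for wj, xj in zip(w[1:], x)))


def train_binary(X, y, lr=0.1, epochs=5000):
    """Fit one logistic regression (labels are 0 or 1) by batch gradient descent."""
    w = [0.0] * (len(X[0]) + 1)
    n = len(X)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for xi, yi in zip(X, y):
            err = score(w, xi) - yi
            grad[0] += err
            for j, xj in enumerate(xi):
                grad[j + 1] += err * xj
        w = [wj - lr * gj / n for wj, gj in zip(w, grad)]
    return w


def train_one_vs_all(X, labels):
    """Train one binary model per case: that case is positive, the rest negative."""
    models = {}
    for case in sorted(set(labels)):
        y = [1 if label == case else 0 for label in labels]
        models[case] = train_binary(X, y)
    return models


def predict_proba(models, x):
    # One independent probability estimate per case.
    return {case: score(w, x) for case, w in models.items()}


def predict(models, x):
    # Pick the case whose binary model reports the highest probability.
    probs = predict_proba(models, x)
    return max(probs, key=probs.get)


# Toy data: three well-separated clusters in 2D, one per case.
X = [(0, 0), (1, 0), (0, 1),
     (5, 0), (6, 0), (5, 1),
     (0, 5), (1, 5), (0, 6)]
labels = ["A", "A", "A", "B", "B", "B", "C", "C", "C"]

models = train_one_vs_all(X, labels)
print(len(models))                   # 3 binary models for 3 cases
print(predict(models, (0.5, 0.5)))   # A
print(predict(models, (5.5, 0.5)))   # B
print(predict(models, (0.5, 5.5)))   # C
```

Note that `predict_proba` returns three separate estimates that need not sum to 1, because each binary model is trained on its own. In practice I’d probably reach for a library implementation rather than hand-rolling the gradient descent.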

I’m wondering how important choosing truly discrete (mutually exclusive) cases is in this type of problem. For example, let’s say I’m trying to predict the weather and have the cases: Sunny, Cloudy, Rainy, Snowy, and Windy. As a Chicagoan, I know it can be both Sunny and Windy. It can also be both Cloudy and Snowy. Since one-vs-all only ever picks the single most probable case, it could never report both. I suspect that choosing the right target cases is as important as the math behind this type of prediction.